Here’s a scary thought: internet usage is the fastest growing phenomenon in the history of mankind, and the most used website on the internet is Google. In fact, the typical internet user (and there are billions of them) goes to Google and asks it questions several times a day. Google obliges and in return lists some websites based on a secret algorithm. This gives you a sense of just how much power lies in the Google algorithm and why it is secret.

Is it any wonder that there is an entire industry (search engine optimization) dedicated to figuring out this algorithm? Is it any wonder that millions of businesses are tweaking their websites based on the latest information about the algorithm? Sure, most of this information is speculative, but get an entire industry of people trying to figure it out, and you start seeing some good educated guesses.

What exactly is a search algorithm?

Google isn’t the only game in town. There are quite a few search engines on the Internet these days. Even though Google has an unprecedented hold over the market, it isn’t the only search engine based on a ranking algorithm. In fact, all search engines today are based on a search algorithm.

A search algorithm is a procedure made up of predetermined steps. Each step works through massive amounts of data, analyzing or sorting it by specific properties, such as the words that appear on a page. Those properties are then matched against search phrases, which are the words we type when searching with Google. So basically, a search algorithm is the “machinery” that returns relevant results whenever we perform a search.
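To make the idea of linking page properties to search phrases more concrete, here is a minimal sketch of an inverted index, a common data structure for this job. It is not Google's implementation; the `PAGES` corpus and the `build_index` and `search` helpers are hypothetical names invented for this illustration.

```python
from collections import defaultdict

# Hypothetical toy corpus: page id -> page text (made up for illustration).
PAGES = {
    "page_a": "fresh coffee beans roasted daily",
    "page_b": "coffee brewing guide for beginners",
    "page_c": "guide to growing tomato plants",
}


def build_index(pages):
    """Map each lowercase word to the set of pages that contain it."""
    index = defaultdict(set)
    for page_id, text in pages.items():
        for word in text.lower().split():
            index[word].add(page_id)
    return index


def search(index, query):
    """Return the pages that contain every word of the query, sorted by id."""
    words = query.lower().split()
    if not words:
        return []
    # Intersect the page sets of all query words; unknown words match nothing.
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)


index = build_index(PAGES)
print(search(index, "coffee"))        # pages that mention "coffee"
print(search(index, "coffee guide"))  # pages that mention both words
```

The index is built once, ahead of time, so answering a query only requires looking up a few words instead of rereading every page.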

The Google Algorithm

Google has put the size of the World Wide Web at 60 trillion individual pages. Google, like other search engines, provides us with search results by first building an index of all these pages, and then sorting them based on different criteria. In order to index all 60 trillion of these webpages, Google does what’s known as “crawling the web”, and uses what it finds to build this global database of pages.

Crawling the web simply means following links from page to page. Crawled pages are sorted by factors like their content and then tracked in the Google Index. Google Search uses several different programs and formulas to sort through the Index and deliver results relevant to our search. These programs and formulas are part of the Google Algorithm.
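Following links from page to page can be sketched as a breadth-first traversal. The example below is only an illustration under simplifying assumptions: it walks a small, made-up in-memory link graph (`LINKS`) instead of fetching real pages, and the `crawl` function is a hypothetical name, not part of any Google tool.

```python
from collections import deque

# Hypothetical link graph: page -> pages it links to (made-up data).
LINKS = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["post-1", "post-2"],
    "post-1": ["blog", "post-2"],
    "post-2": [],
    "orphan": ["home"],  # no page links here, so the crawler never finds it
}


def crawl(links, start):
    """Breadth-first crawl: return pages in the order they are discovered."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        page = queue.popleft()
        order.append(page)
        for target in links.get(page, []):
            if target not in seen:  # visit each page only once
                seen.add(target)
                queue.append(target)
    return order


crawled = crawl(LINKS, "home")
print(crawled)
```

Note that the `orphan` page is never reached: a crawler that only follows links cannot discover a page that nothing links to.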

When we search Google for something, the algorithms analyze what we have typed in and try to find the best results. Some web pages, images or videos come ahead of others in the search results. This is because Google has special algorithms that use over 200 factors when ranking the results. These factors include the quality of the website, how recent the content is, and even the geographical location and web history of the user.
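One simple way to picture ranking by several factors is a weighted sum. The sketch below is purely illustrative: the three signals, their 0 to 1 scales, and the numbers in `WEIGHTS` are assumptions made up for this example, since the real factors and how Google combines them are not public.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    quality: float    # 0..1, hypothetical content quality signal
    freshness: float  # 0..1, where 1 means published very recently
    local: float      # 0..1, closeness to the user's location


# Made-up weights for illustration; the real weights are not public.
WEIGHTS = {"quality": 0.6, "freshness": 0.25, "local": 0.15}


def score(page):
    """Combine the signals into one number using the weights above."""
    return (WEIGHTS["quality"] * page.quality
            + WEIGHTS["freshness"] * page.freshness
            + WEIGHTS["local"] * page.local)


def rank(pages):
    """Return the pages ordered from highest to lowest score."""
    return sorted(pages, key=score, reverse=True)


pages = [
    Page("example.com/old-but-good", quality=0.9, freshness=0.2, local=0.5),
    Page("example.com/new-but-thin", quality=0.3, freshness=1.0, local=0.5),
    Page("example.com/balanced", quality=0.7, freshness=0.7, local=0.9),
]
for page in rank(pages):
    print(f"{score(page):.3f}  {page.url}")
```

With these weights the balanced page comes first and the fresh but thin page comes last, which shows how no single factor decides the order on its own.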

Brief summary of Google Algorithms

Google uses many algorithms to provide us with the results for our searches. With more than 200 factors for selecting and ranking the search results, Google’s ways of processing and serving data are indeed complicated. Here’s a short breakdown of the most important algorithms and updates.

- PageRank

Google’s PageRank is the first and best known algorithm in terms of ranking websites in the search results. PageRank was developed in 1996 by the founders of Google, Larry Page and Sergey Brin, as part of a research project. This algorithm turned out to be a big factor in Google’s rise to success. PageRank, as the name suggests, is the algorithm used to calculate the importance of a website. To do that, PageRank takes into account the quality and number of links that lead to a page. PageRank was based on the premise that more important websites have more backlinks from other websites.
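The idea that a page matters more when important pages link to it can be sketched with a simplified, iterative version of PageRank. This follows the textbook formulation rather than Google's production system; the damping factor of 0.85 is the value usually quoted from the original research and is an assumption here, and the four-page `LINKS` graph is made up.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by repeated redistribution of rank.

    `links` maps every page to the list of distinct pages it links to.
    Every link target must also appear as a key.
    """
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Rank held by pages without outgoing links is shared among all pages.
        dangling = sum(ranks[page] for page in pages if not links[page])
        new_ranks = {
            page: (1 - damping) / n + damping * dangling / n for page in pages
        }
        # Each page passes its rank on in equal parts to the pages it links to.
        for page, targets in links.items():
            for target in targets:
                new_ranks[target] += damping * ranks[page] / len(targets)
        ranks = new_ranks
    return ranks


# Hypothetical graph: three pages link to "c", and "c" links only to "a".
LINKS = {
    "a": ["c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}

ranks = pagerank(LINKS)
for page, value in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {value:.3f}")
```

Page `c` ends up on top because it has the most backlinks, and `a` outranks `b` and `d` even though it has only one backlink, because that link comes from the highly ranked `c`. This is the "quality and number of links" idea in miniature.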

- Google Panda

Eventually people figured out how to “game the system” and trick the original PageRank algorithm by building links in unethical ways. Google had to adapt and make big changes to the algorithm. Google Panda was the first algorithm update to massively disrupt the entire industry. First released in February 2011, this algorithm is a kind of filter that aims to decrease the rankings of low-quality websites in order to increase the quality of the search results. The release of Google Panda affected many websites’ search rankings and challenged webmasters to increase the quality of their website content. Google even provided some guidance on its blog about how website quality is evaluated and how to improve it.

- Google Penguin

Google Penguin is a Google search algorithm update first announced in April 2012. The main purpose of releasing this update was to fight websites that violate Google’s Webmaster Guidelines, especially search spam. More specifically, this update is aimed at websites using manipulative techniques to artificially increase a website’s rank. These techniques, known as black-hat SEO, involve manipulating the number of backlinks and stuffing metadata with irrelevant keywords and false information.

- Google Hummingbird

Released in September 2013, Google Hummingbird is considered one of the major updates to Google’s search algorithm. The underlying idea behind the Hummingbird update was to make user interactions with the search engine more human. With Hummingbird, Google tried to help the search engine better understand the relationship between search keywords and the meaning and concepts behind phrases. Google Hummingbird intends to deliver better search results by focusing on semantics, namely contextual and conversational search. This update improves search results’ relevance, as well as the ease of searching, which is especially useful in voice search.

Search algorithms and navigating the World Wide Web

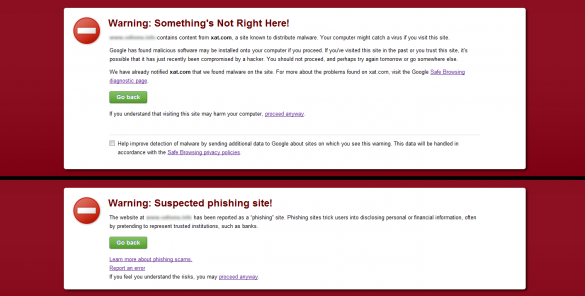

The number of web pages on the Internet is growing faster than ever. Even if there weren’t 60 trillion webpages, it would still be virtually impossible to effectively navigate the World Wide Web without the use of search engines. Google’s search engine is the most widely used search engine for a reason. Its sophisticated search algorithms aim to provide us with relevant, high-quality content, while also saving us from the risks of visiting potentially malicious websites.

The various updates to the Google search algorithm are changing the way users access websites, but also the way webmasters handle SEO. Search engines no longer operate with keywords alone. With the rise of semantic search, machines are starting to parse and interpret human language better than ever. This means that they are much better able to tell how useful content is to humans.

This is why search engine optimization now has an entirely new variable to worry about: high-quality, useful content. You can use every other major SEO tool (like building backlinks, optimizing keywords, etc.), but if your content sucks, you won’t get far. Modern algorithms virtually guarantee this.

This doesn’t mean that SEO as we know it will disappear. You still need to consider links and keywords, but there are major changes about to take place. There will be changes in the way users search for and find information, but also in the way webmasters go about getting better rankings.